Memristor-Based Accelerators: The Next Frontier in AI Hardware

- jenniferg17

- Jul 31

Updated: Aug 7

Read Below:

Breakthrough Efficiency: By merging memory and computation, memristor-based accelerators deliver up to 2,446× better energy efficiency than traditional GPUs, as reported for the PUMA accelerator design.

Edge-Ready AI Hardware: Compact, low-power and scalable hardware, ideal for wearables, drones and remote sensors.

Local Enablement by McKinsey Electronics: Supplying cutting-edge components and support across Africa to drive next-gen AI adoption.

As AI workloads grow exponentially in complexity and scale, traditional computing architectures are reaching their physical and operational limits. The industry is actively exploring unconventional computing models that can deliver breakthroughs in performance and energy efficiency. One such technology stands out: memristor-based accelerators.

What Are Memristors?

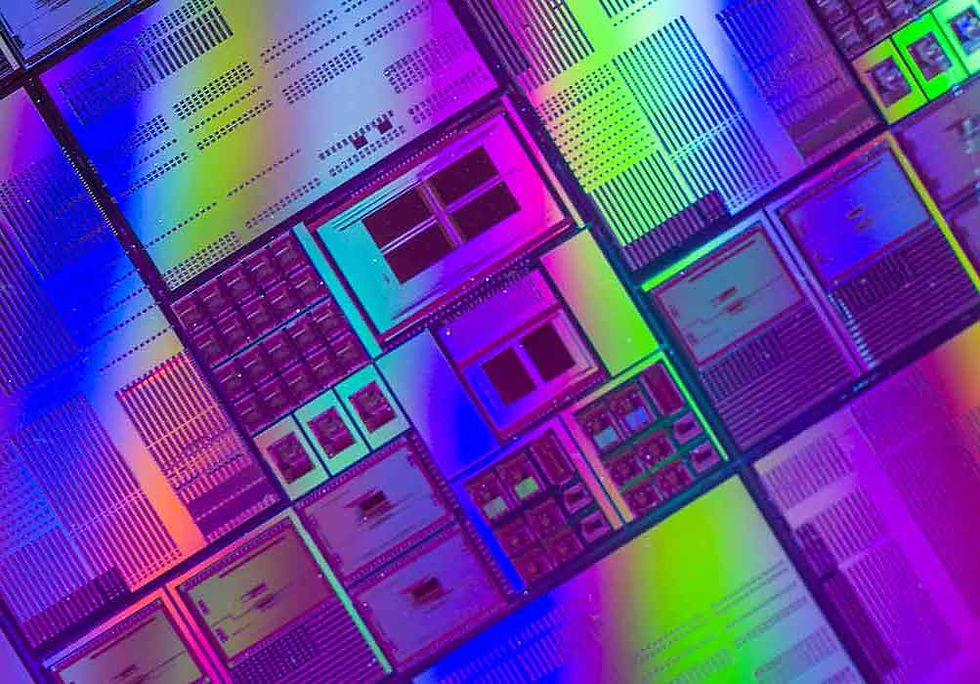

Short for memory resistors, memristors are passive two-terminal circuit elements whose resistance depends on the amount of charge that has passed through them. This lets them retain information without power (non-volatility) and change resistance in a data-dependent way, mimicking the behavior of biological synapses. Because they can compute and store in the same location, they open up new architectures for in-memory computing and neuromorphic systems.
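To make the "remembers charge" idea concrete, here is a minimal sketch of a toy memristor model. It assumes a single internal state that moves in proportion to the charge passed and a resistance that interpolates linearly between two limits. The class name `ToyMemristor` and every parameter value are hypothetical choices for illustration, not figures from any real device.

```python
from dataclasses import dataclass


@dataclass
class ToyMemristor:
    """Toy charge-controlled memristor; all parameter values are hypothetical."""
    r_on: float = 100.0        # resistance when fully "on" (ohms)
    r_off: float = 16_000.0    # resistance when fully "off" (ohms)
    k: float = 1e4             # state change per coulomb of charge
    x: float = 0.0             # internal state, clamped to [0, 1]

    def resistance(self) -> float:
        # Linear interpolation between the off and on resistances.
        return self.r_on * self.x + self.r_off * (1.0 - self.x)

    def apply_voltage(self, volts: float, dt: float) -> float:
        """Apply a voltage for dt seconds; return the current that flowed."""
        current = volts / self.resistance()
        # The state moves in proportion to the charge (current * dt).
        self.x = min(1.0, max(0.0, self.x + self.k * current * dt))
        return current


cell = ToyMemristor()
r_start = cell.resistance()
for _ in range(100):                 # positive pulses lower the resistance
    cell.apply_voltage(1.0, 1e-3)
r_written = cell.resistance()
cell.apply_voltage(0.0, 1.0)         # no bias: the state is retained
r_retained = cell.resistance()
print(r_start, r_written, r_retained)
```

In this sketch, positive pulses lower the resistance, zero bias leaves it unchanged (the non-volatile behavior), and negative pulses would raise it again. Real devices are nonlinear and noisy, so this is only a mental model.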

Key Advantages of Memristor-Based Accelerators

1. Energy Efficiency

Traditional von Neumann architectures suffer from the so-called "memory wall", a bottleneck caused by the physical separation between memory and processing units. Memristor-based systems sidestep this bottleneck by integrating storage and computation in a single array.

Recent advances, such as the PUMA (Programmable Ultra-efficient Memristor-based Accelerator) architecture, have demonstrated the following gains over high-performance GPUs like the NVIDIA Tesla V100:

Up to 2,446× better energy efficiency

Up to 66× lower latency

The chart below compares the relative energy efficiency of different AI hardware platforms, based on recent peer-reviewed data (with 350× shown for generalized accelerator gains):

2. In-Memory Computing

Memristors enable processing-in-memory (PIM), allowing computations to occur directly within memory arrays. This not only reduces data movement but also drastically cuts down on energy and time costs associated with memory access.
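The core operation behind this is an analog matrix-vector multiply. If each memristor in a crossbar stores a conductance, applying voltages to the rows produces per-cell currents by Ohm's law, and each column wire sums them by Kirchhoff's current law. The sketch below models only that idealized arithmetic; the function name `crossbar_mvm` and the example values are illustrative assumptions, and wire resistance, noise and converter effects are ignored.

```python
def crossbar_mvm(conductances, voltages):
    """Idealized crossbar read: column current I_j = sum_i V_i * G[i][j].

    conductances: list of rows, each a list of conductances in siemens
    voltages:     one input voltage per row, in volts
    """
    if len(voltages) != len(conductances):
        raise ValueError("need exactly one voltage per crossbar row")
    currents = [0.0] * len(conductances[0])
    for v, row in zip(voltages, conductances):
        for j, g in enumerate(row):
            currents[j] += v * g      # Ohm's law per cell, summed per column
    return currents


# Hypothetical 2x2 array: the stored conductances act as the weight matrix.
G = [[1e-3, 2e-3],
     [3e-3, 4e-3]]
V = [0.1, 0.2]
print(crossbar_mvm(G, V))             # column currents in amperes
```

The point of the design is that the weights never leave the array: the multiply-accumulate happens where the data is stored, and only the input voltages and output currents move.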

In benchmarked binarized neural networks, memristor arrays have demonstrated significant throughput gains and power efficiency, enabling real-time AI inference at the edge, even in solar-powered devices.
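For readers unfamiliar with binarized networks, the sketch below shows the arithmetic they rely on: with weights and activations restricted to +1 and -1, a dot product reduces to counting the positions where two bit patterns agree (XNOR followed by a popcount). This is a generic illustration, not the specific networks or hardware mapping used in the benchmarks mentioned above; the helper names `encode` and `binary_dot` are hypothetical.

```python
def encode(signs):
    """Pack a list of +1/-1 values into an integer (bit set means +1)."""
    bits = 0
    for k, s in enumerate(signs):
        if s == 1:
            bits |= 1 << k
        elif s != -1:
            raise ValueError("values must be +1 or -1")
    return bits


def binary_dot(a_bits, b_bits, n):
    """Dot product of two n-element +1/-1 vectors via XNOR and popcount."""
    mask = (1 << n) - 1
    agree = ~(a_bits ^ b_bits) & mask   # XNOR: 1 where the signs match
    matches = bin(agree).count("1")     # popcount
    return 2 * matches - n              # matches minus mismatches


activations = [1, -1, 1, 1]
weights = [1, 1, -1, 1]
dot = binary_dot(encode(activations), encode(weights), len(weights))
neuron_output = 1 if dot >= 0 else -1   # sign activation
print(dot, neuron_output)
```

Because each weight is a single bit, it can be held as one of two resistance states, which is part of why this network style is often paired with memristor arrays.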

3. Scalability and Fabrication

Memristors are inherently nanoscale and compatible with CMOS back-end-of-line (BEOL) processes. Their 3D stackability allows for high-density integration, making them highly suitable for embedded and compact AI hardware. This facilitates broader adoption in edge applications such as drones, wearables and mobile robotics.

Recent Innovations

Bayesian Memristor Machines: Enabling energy-efficient probabilistic AI computation, especially for tasks like gesture recognition and medical diagnostics.

Self-Powered AI Sensors: Memristor arrays integrated with solar cells, enabling off-grid, environmental and health-monitoring applications.

Robustness in Extreme Conditions: Demonstrated stability under fluctuating light, temperature and voltage conditions.

Challenges to Overcome

Despite their advantages, several technical challenges remain:

Device Variability: Fabrication inconsistencies can lead to unpredictable behavior.

Endurance: Limited write cycles compared to SRAM/DRAM.

Standardization: Lack of unified models and design tools hinders commercial adoption.

Integration: Requires co-optimization of circuit, architecture and algorithm levels.
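The device-variability point can be explored with a small Monte Carlo sketch. It assumes each stored conductance deviates from its target by independent Gaussian noise with relative spread `sigma`, and measures how far the resulting column currents drift from the ideal values. The noise model, the function name `mean_relative_error` and the example values are simplifying assumptions, not measured device data.

```python
import random


def mean_relative_error(conductances, voltages, sigma, trials=200, seed=0):
    """Average relative error of column currents under conductance noise.

    Each conductance is scaled by (1 + N(0, sigma)), a simplified noise
    model. Ideal column currents must be nonzero.
    """
    rng = random.Random(seed)          # fixed seed keeps runs repeatable
    n_cols = len(conductances[0])
    ideal = [sum(v * row[j] for v, row in zip(voltages, conductances))
             for j in range(n_cols)]
    total = 0.0
    for _ in range(trials):
        for j in range(n_cols):
            noisy = sum(v * row[j] * (1.0 + rng.gauss(0.0, sigma))
                        for v, row in zip(voltages, conductances))
            total += abs(noisy - ideal[j]) / abs(ideal[j])
    return total / (trials * n_cols)


# Hypothetical 2x2 array and inputs.
G = [[1e-3, 2e-3],
     [3e-3, 4e-3]]
V = [0.1, 0.2]
for sigma in (0.0, 0.01, 0.10):
    print(sigma, mean_relative_error(G, V, sigma))
```

A sweep like this is one way to estimate how much variation an algorithm can tolerate before accuracy suffers, which is where the circuit, architecture and algorithm co-optimization mentioned above comes in.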

Strategic Outlook

Memristor-based AI accelerators are transitioning from academic prototypes to commercial viability. With growing investment in neuromorphic engineering and edge intelligence, these accelerators could redefine the silicon roadmap for AI by 2030.

Semiconductor companies, startups and research consortia are actively collaborating to scale up these innovations. Watch for platforms that integrate memristor arrays with RISC-V or custom AI SoCs; they are well placed to shape the next phase of AI hardware.

Memristor-based accelerators are more than just an academic curiosity. They represent a technological leap toward ultra-efficient, high-performance AI computing. Their convergence of memory and logic, combined with energy and space efficiency, makes them a prime candidate for next-generation AI architectures.

As AI workloads push the boundaries of conventional hardware, McKinsey Electronics is helping engineers across South and North Africa transition toward next-generation solutions like memristor-based accelerators. Through our distribution of advanced memory technologies, high-speed analog components and AI-optimized microcontrollers from tier-one manufacturers, we enable R&D teams and system architects to prototype and scale emerging hardware architectures.

Our local presence ensures that innovators across these regions have the tools, components and advisory support needed to explore neuromorphic computing, edge AI acceleration and new memory-centric designs, accelerating their path toward more intelligent, energy-efficient systems.